High-End Interior Construction | Nội Thất Quyết Loan | Premium Wooden Furniture

Interior Product Photo Gallery

Leave Your Details and Quyết Loan Will Call You Right Away!

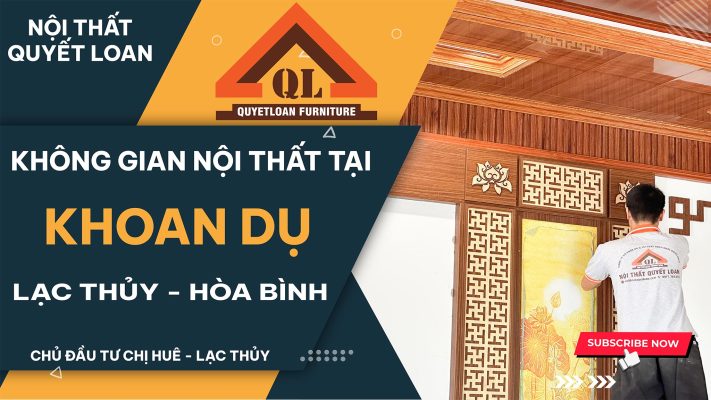

Featured Projects

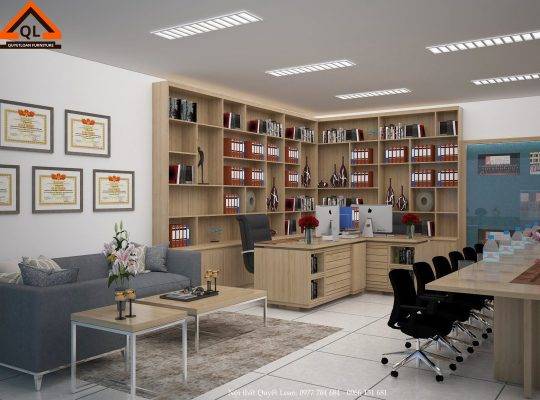

Interior Construction Products

Living Room Furniture

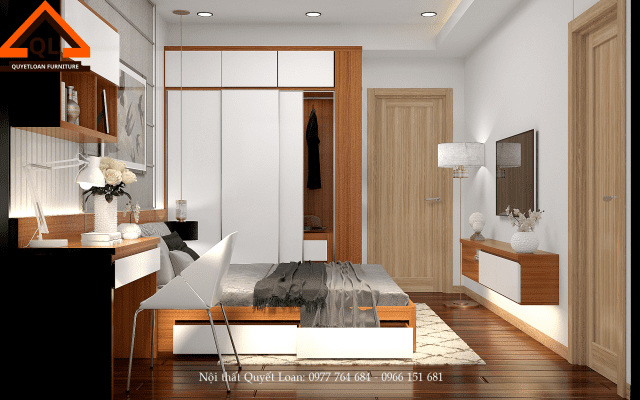

Bedroom Furniture

Furniture Packages by Style

Projects

Clients

Why Choose Us

Quyết Loan Specializes in Consulting, Design, and Construction of High-End Interiors

Interior design is not just about arranging a space; it is the art of combining aesthetics with practicality. For those who appreciate luxury and refinement, choosing the right interior construction firm is essential. Quyết Loan has long been known as one of the leading names in high-end interior construction.

Bringing Refinement to Every Living Space

Quyết Loan is more than an interior construction firm; it is a trusted partner in turning design ideas into reality. With a team of architects and skilled craftsmen, we are committed to delivering refined, luxurious, high-end living spaces.

Variety and Originality in Design

Quyết Loan's strength lies in the variety and originality of its designs. We do not stop at meeting basic needs; we keep looking for fresh creative ideas. From modern to classic styles, and from small spaces to large ones, we are confident we can meet every client requirement.

Leading Quality and Reputation

With more than a decade in high-end interior construction, Quyết Loan has established its standing through a series of successful projects. Product and service quality always come first, and our reputation and customer satisfaction are the standards we hold ourselves to.

A Professional and Passionate Team

The Quyết Loan team is made up not only of designers and skilled craftsmen, but of people who are passionate about their work and ready to support clients from the initial consultation through to after the project is completed. That professionalism and enthusiasm is one of the key reasons clients trust Quyết Loan.

High-End Interior Construction

In short, with its experience, variety of designs, top-tier product and service quality, and a professional, passionate team, Quyết Loan is proud to be a reputable and trusted name in high-end interior construction. Let us turn your ideas into reality and give your living space a distinctly refined and luxurious character!

Premium Wooden Furniture

Contact us today to begin your interior design journey. “Nội Thất Quyết Loan: Elevating Class”.